(1) Evaluate your test results.

What method(s) did you use to evaluate the results of your usability tests?

We first collected all of our notes. Because there were only 14 in total, we skipped more elaborate evaluation methods and simply discussed every note as a group. We also used a post-test questionnaire, the Usability Metric for User Experience (UMUX), to gather feedback from participants after the test. The questionnaire asked about their impressions after their first contact with the app.
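For reference, UMUX responses are commonly combined into a single score from 0 to 100. The sketch below follows the usual UMUX scoring rule. It assumes a 7-point scale (1 = strongly disagree, 7 = strongly agree), with items 1 and 3 (e.g., "easy to use") positively worded and items 2 and 4 (e.g., "a frustrating experience") negatively worded. The function name `umux_score` and the sample responses are hypothetical and are not our participants' data.

```python
def umux_score(responses: list[int]) -> float:
    """Return the 0-100 UMUX score for one participant's four responses (1-7)."""
    if len(responses) != 4:
        raise ValueError("UMUX has exactly four items")
    if any(not 1 <= r <= 7 for r in responses):
        raise ValueError("responses must be on a 1-7 scale")
    total = 0
    for index, response in enumerate(responses):
        if index % 2 == 0:
            # Items 1 and 3 are positively worded: contribute (response - 1).
            total += response - 1
        else:
            # Items 2 and 4 are negatively worded: contribute (7 - response).
            total += 7 - response
    # Each item contributes 0-6 points, so the maximum raw total is 24.
    return total / 24 * 100


# Hypothetical participant: agrees it meets requirements and is easy to use,
# disagrees that it is frustrating or needs much correcting.
print(umux_score([6, 2, 7, 2]))  # 87.5
```

Averaging the per-participant scores gives a single group score that can be compared across test rounds.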

How did you evaluate the results?

We identified the main points that we all agreed were relevant to the tests we conducted. These were the issues that caused users the most trouble during testing, and we used them to make final adjustments to our prototype. We also collected positive points to see what worked well and met with our users' approval. From all the information gathered, we extracted the main takeaways and applied them to the prototype.

What did you learn from the testing?

- Overall, users found the app very easy to use: all surveyed users ‘strongly agreed’ with that statement in the post-test survey, and one described it as intuitive.

- All surveyed users disagreed with the statement ‘Veritas is a frustrating experience’.

- The info button itself was not clickable, only its text. Two users, however, clicked only on the button and assumed that it didn’t work.

- One user criticized that the onboarding screen (explaining how to use the app) didn’t show the current page, and said they felt the urge to skip it and jump right into the app. This relates to Nielsen’s heuristic ‘visibility of system status’.

Considering usability in the narrow sense, we are fairly confident that the app is easy to use and well designed.

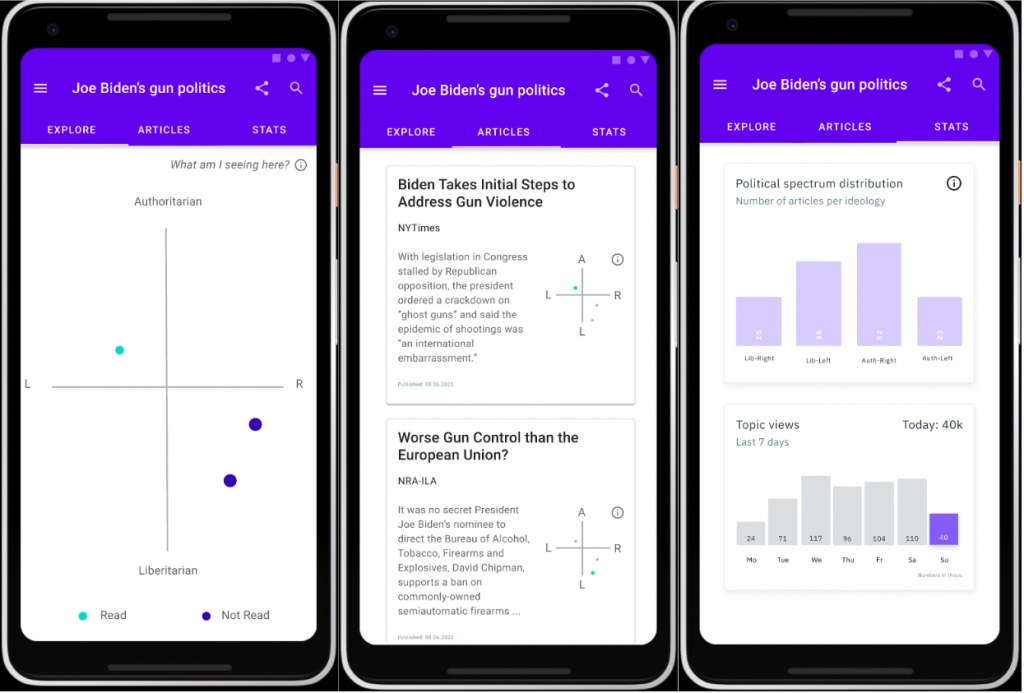

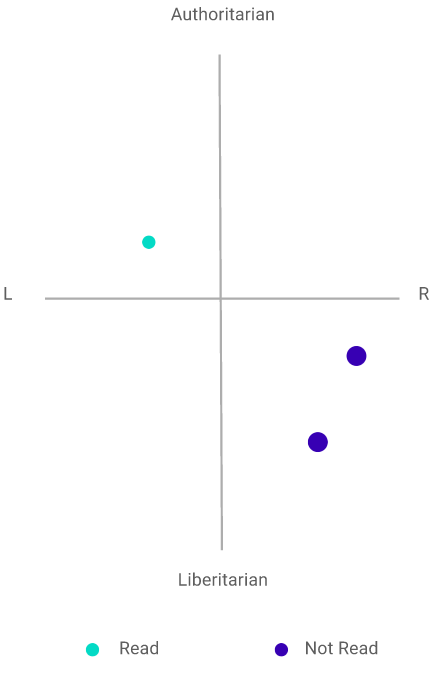

However, many users doubted the usefulness of the political compass, and many wished for an explanation of how articles are positioned on it. One user rejected the idea of the political compass altogether. One suggested alternative was to show more “political dimensions” in a radar chart, although we feel this would make the results even harder to interpret. Still, one user explicitly mentioned, without being asked, that they would use the app, and another said he might use it.

The testing itself was quite relaxed. The participants were well informed about political topics and therefore very interested in our app, which made them a good user group. The tasks, however, did not quite serve their purpose: users usually spent only one or two minutes on each task, and they did not think aloud consistently. When asked for their opinion afterwards, though, they were eager to give additional feedback.

What are your main takeaways?

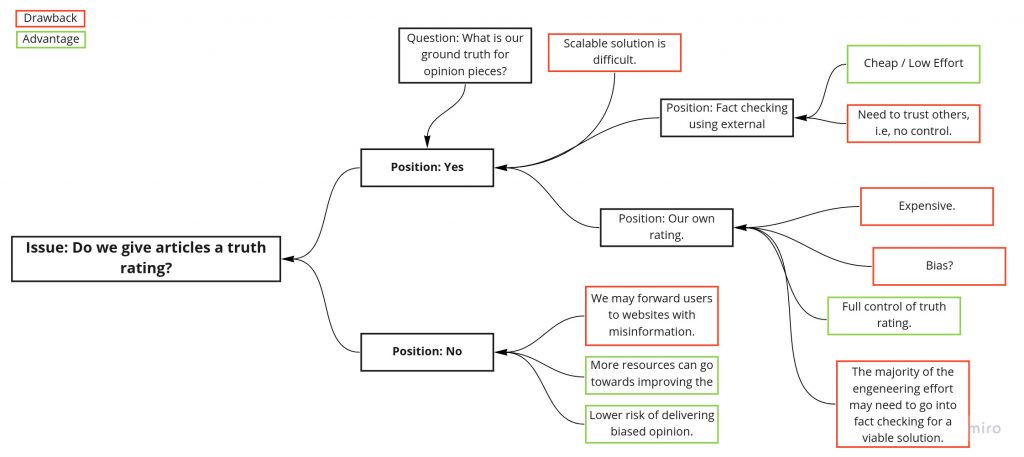

- The political compass – our key feature in some sense – is controversial.

- All participants asked which factors determine where articles are placed on the political compass. We should show this information in our app.

- UX-wise we did a fairly good job 🙂

(2) Project description

Prototype:

Unique Part:

Name: Veritas – Escape your filter bubble today

Group Members: Arne, Clemens, Daniel, Mateusz

Project Description: Today’s news is often inherently biased and lacks nuanced journalism, some outlets more so, such as Breitbart News, and some less, such as Reuters. With the recent rise of social media, a large share of unsuspecting readers fall into a so-called filter bubble, i.e., they only see news from the political backgrounds they are expected to like. Some argue that this may lead to political extremism by further entrenching preconceived opinions and ideas.

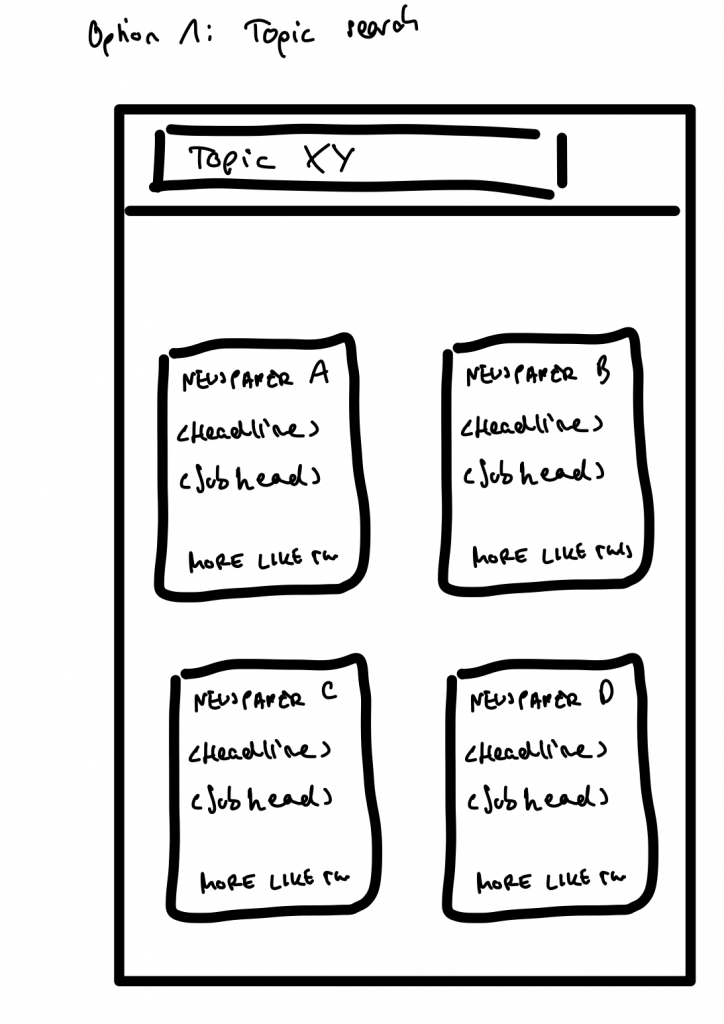

Veritas is a tool that makes it easy for users to explore opinions and articles from all corners of the political spectrum. You give Veritas a topic, and it presents a large collection of articles on that topic covering a diverse set of published viewpoints.

Final Prototype: https://www.figma.com/proto/xqMeWV6kxUEShVbQSxePjD/THE-INVINCIBLE?node-id=167%3A178&scaling=scale-down&page-id=0%3A1

(3) Reflection

Who did what?

Arne, Daniel, and Mateusz evaluated the test results. Clemens wrote the project description and reflection.

What did we learn?

We learned about the Smartspider, a different type of political compass.

What went well?

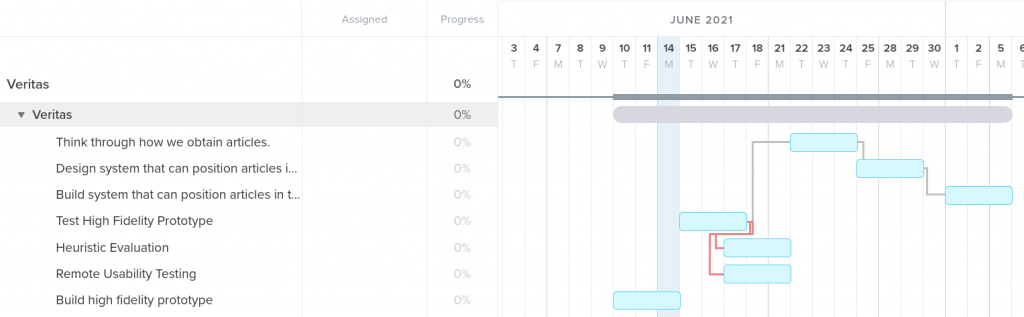

We split up the tasks well and everything was on time.

What can be improved?

If we have time, we are considering improving our prototype further, because it still lacks some realism.