By Vicente Sandoval

http://dx.doi.org/10.17169/refubium-45258

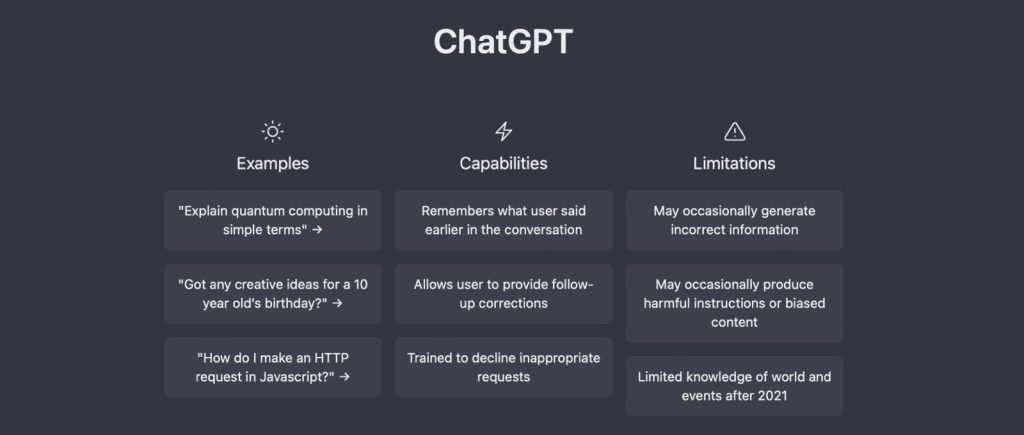

Many researchers these days are concerned about the potential and risks of using Artificial Intelligence (AI), especially language models, in research. So I asked ChatGPT: “Create an opinion column about the potentials and risks of using ChatGPT in disaster research as if you were Vicente Sandoval”. Here is its opinion (my own reflections are at the end):

As a language model, I believe that the use of ChatGPT in disaster research could have both significant potentials and risks. On the one hand, ChatGPT’s ability to understand and process language could help researchers to quickly analyze and make sense of large amounts of data related to disaster events. This could be especially useful in situations where time is of the essence, such as in the aftermath of a disaster*, when decision-makers need to act quickly to save lives and minimize damage.

Furthermore, ChatGPT’s ability to generate language could also be helpful in disaster research. For example, it could be used to generate simulations of disaster events, which could help researchers to understand how different factors (such as building design, evacuation plans, and emergency response protocols) might affect the outcome of a disaster.

However, there are also several potential risks associated with the use of ChatGPT in disaster research. One concern is that ChatGPT could introduce bias into the analysis of disaster data. As with any machine learning model, ChatGPT’s output is only as good as the data it is trained on. If the data used to train ChatGPT is biased (for example, if it contains stereotypes or discriminatory language), then ChatGPT’s output may also be biased.

Another risk is that the use of ChatGPT in disaster research could contribute to the spread of misinformation. ChatGPT is capable of generating convincing text, which could be used to spread false information about disaster events. This could have serious consequences, as it could lead people to take actions that put themselves or others at risk.

In conclusion, while the use of ChatGPT in disaster research has the potential to be incredibly valuable, it is important to proceed with caution. Researchers should be aware of the potential risks associated with using ChatGPT, and take steps to mitigate those risks (such as ensuring that the data used to train ChatGPT is diverse and unbiased, and carefully reviewing all output generated by ChatGPT). By doing so, researchers can harness the power of ChatGPT to advance our understanding of disasters and improve our ability to respond to them.

My reflections

Impressed? Depressed? What do you think? ChatGPT represents a milestone in the advancement of artificial intelligence (AI) in terms of natural language generation and processing. It can produce natural language responses and adapt to the tone and style of the queries it receives, creating convincing human-like answers. ChatGPT also has an impressive ability to process large amounts of data, which makes it an invaluable tool for those looking to extract insight from large data sets.

Nonetheless, this also poses challenges and risks. First, convincing natural language makes AI a potentially dangerous tool for generating fake news and spreading misleading information. Second, by automating tasks, it could displace large numbers of human workers in many industries and sectors.

I recently watched “The Matrix Resurrections” (Wachowski, 2021), where the use of AI was also discussed. In the script, Niobe (Jada Pinkett Smith) introduces Quillion to Neo (Keanu Reeves). Quillion is a lead digitologist who is also a machine, an AI capable of transforming the digital code from the Matrix into DNA sequences to grow (real) strawberries. A machine helping human rebels in the dystopian Matrix world? Yes.

In the film, Niobe shows Neo the new city named Io, built after the former city, Zion, was destroyed by the machines. She remarks, “Zion was stuck in the past. Stuck in war. Stuck in a Matrix of its own. They believed that it had to be us or them [the machines]. This city was built by us and them.” (My own italics).

Like Niobe, scholars such as Manuel Castells (2023) propose that the debate should not be about “with or without AI” but about “with and without AI”. It is true that, as a tool, AI can automate many tasks, carrying the risk of destroying jobs in many areas (management, law, art, science, etc.), which could have negative social and economic consequences. But it is also true that AI is here to stay.

Following this line of thinking, I have only one final reflection: artificial intelligence will certainly disrupt science and research in many ways, some positive and some negative, but researchers and scientists will not disappear. The difference will be between researchers who work with and without AI.

*ChatGPT used the concept of “natural disasters” in this example, which in my opinion is clearly a bias. This is probably because the data used to train ChatGPT have the same flaw. As ChatGPT itself put it: “For example, if it contains stereotypes or discriminatory language, then ChatGPT’s output may also be biased.”

Vicente Sandoval is a Research Associate at the Katastrophenforschungsstelle (Disaster Research Unit), Freie Universität Berlin. He is a consultant and researcher on urban disaster risk governance with interests in evidence-based research, radical interpretations of disasters, disaster vulnerability, and climate-resilient urban development. More: https://fu-berlin.de/x6m8f13

References

Castells, Manuel. (2023): ChatGPT. In: La Vanguardia, 25 Feb. 2023. Available online at https://www.lavanguardia.com/opinion/20230225/8782438/chatgpt.html checked on 03 March 2023.

Wachowski, Lana. (Director). (2021): The Matrix Resurrections. Warner Bros. Pictures.